The past year of graphics card reviews has been an exercise in dramatic asterisks, and for good reason. Nvidia and AMD have seen fit to ensure members of the press have access to new graphics cards ahead of their retail launches, which has placed us in a comfy position to praise each of their latest-gen offerings: good prices, tons of power.

Then we see our comment sections explode with unsatisfied customers wondering how the heck to actually buy them. I've since softened my tune on these pre-launch previews.

I say all of this up front about the Nvidia RTX 3060, going on sale today, February 25 (at 12pm ET, if you're interested in entering the day-one sales fray), because it's the first Nvidia GPU I've tested in a while to make my cautious stance easier. The company has been on a tear with its RTX 3000-series of cards in terms of sheer consumer value, particularly compared to equivalent prior-gen cards (the $1,499 RTX 3090 notwithstanding), but the $329 RTX 3060 (not to be confused with December's 3060 Ti) doesn't quite pull the same weight. It's a good 1080p card with 1440p room to flex, but it's not the next-gen jump in its Nvidia price category we've grown accustomed to.

Plus, unlike other modern Nvidia cards, this one lacks one particular backup reason to invest as heavily: cryptomining potential. (And that's intentional on Nvidia's part.)

Hopefully your plate's better than mine

The EVGA RTX 3060, as posed next to the larger RTX 3060 Ti Founders Edition. (Sam Machkovech)

RTX 3060 glamour shot. (Sam Machkovech)

EVGA's version opts for a flat, square back, as opposed to some sort of fancy cooling routing through a more carved or styled board. (Sam Machkovech)

In order to install my review GPU into my testing rig, I had to remove this mounting plate. (Sam Machkovech)

A sampling of other RTX 3060 cards being sold by OEMs starting today. (Nvidia)

Little 3060. (Nvidia)

White 3060. (Nvidia)

Larger white 3060. (Nvidia)

Garish 3060. (Nvidia)

Ahead of today's review, Nvidia provided Ars Technica with an EVGA version of the RTX 3060, since this model won't receive an Nvidia "Founders Edition" version (a first for a mainline RTX card). This 3060 variant keeps its construction simple, with two traditional, bottom-mounted fans and none of the "passthrough" fan design found in Nvidia's FEs, and it isn't advertised with any particular supercharged cooling options. Despite the lack of clever fan construction, this model runs cool and quiet at stock clocks, never exceeding 65°C at full load.

Unfortunately for my testing rig, however, I had to take the unusual step of unscrewing EVGA's mounting plate so that it wouldn't block me from inserting the GPU into my case. Anecdotally, this was the first time in my seven years at Ars that I've had to do this with a GPU I've reviewed or tested (though admittedly, those have mostly been Nvidia's and AMD's stock models, as opposed to a wide variety of OEM cards).

Thankfully, I was still able to stably situate the EVGA GPU into my machine for testing purposes, at which point I began comparing it to the nearest-performance GPU I had in my possession: the RTX 2060 Super, released in July 2019 for $399. That model was a much-needed upgrade to the tepid RTX 2060, and it arrived as a good enough option for gamers who wanted robust 1080p performance across the board, along with plenty of 1440p options and a reasonable way to dip toes into the worlds of ray tracing and Nvidia's proprietary deep-learning super sampling (DLSS).

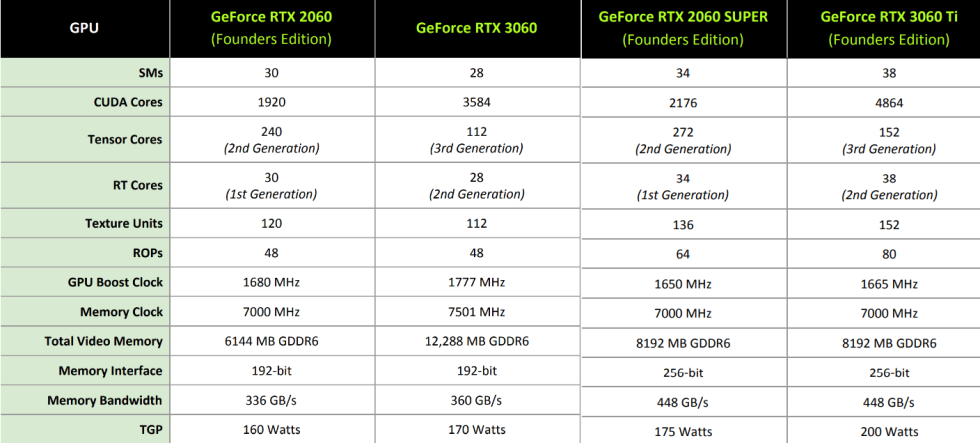

In my testing, I found that the "$70 cheaper" GPU (as priced in a magical, scalper-free marketplace) generally landed neck and neck with the July 2019 card rather than blowing away the older model. This appears to boil down to the give-and-take nature of the two cards' spec sheets. The RTX 3060 has over 150 percent of the 2060 Super's CUDA cores, along with 150 percent of its GDDR6 VRAM (12GB versus 8GB) and a slight lead in core clocks. But its memory configuration is a step down: the bus narrows from 256-bit to 192-bit, which means lower memory bandwidth. Plus, comparing each card's proprietary "RTX" potential by counting tensor cores and RT cores is tricky, since the RTX 3060 has fewer of both than the RTX 2060 Super, albeit from newer generations.
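The bandwidth trade-off can be made concrete with the usual peak-bandwidth arithmetic: bus width in bytes times the per-pin memory data rate. The sketch below is illustrative only. The core counts and data rates (15Gbps for the RTX 3060, 14Gbps for the RTX 2060 Super) are assumed from public spec sheets rather than taken from this review, and the helper names are hypothetical.

```python
# Assumed spec-sheet values (not measured or stated in this review).
SPECS = {
    "RTX 3060": {"cuda_cores": 3584, "vram_gb": 12, "bus_bits": 192, "gbps_per_pin": 15.0},
    "RTX 2060 Super": {"cuda_cores": 2176, "vram_gb": 8, "bus_bits": 256, "gbps_per_pin": 14.0},
}


def peak_bandwidth_gb_s(bus_bits: int, gbps_per_pin: float) -> float:
    """Theoretical peak memory bandwidth: bus width in bytes times per-pin data rate (Gb/s)."""
    return bus_bits / 8 * gbps_per_pin


def as_percent_of(new: float, old: float) -> float:
    """Express `new` as a percentage of `old`."""
    return 100.0 * new / old


new, old = SPECS["RTX 3060"], SPECS["RTX 2060 Super"]
print(f"CUDA cores: {as_percent_of(new['cuda_cores'], old['cuda_cores']):.0f}% of the 2060 Super")
print(f"VRAM: {as_percent_of(new['vram_gb'], old['vram_gb']):.0f}% of the 2060 Super")
for name, spec in SPECS.items():
    print(f"{name}: {peak_bandwidth_gb_s(spec['bus_bits'], spec['gbps_per_pin']):.0f} GB/s peak")
```

Under these assumed figures, the extra cores and VRAM come with roughly 360GB/s of peak bandwidth versus 448GB/s on the older card, one plausible piece of the give-and-take described above.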

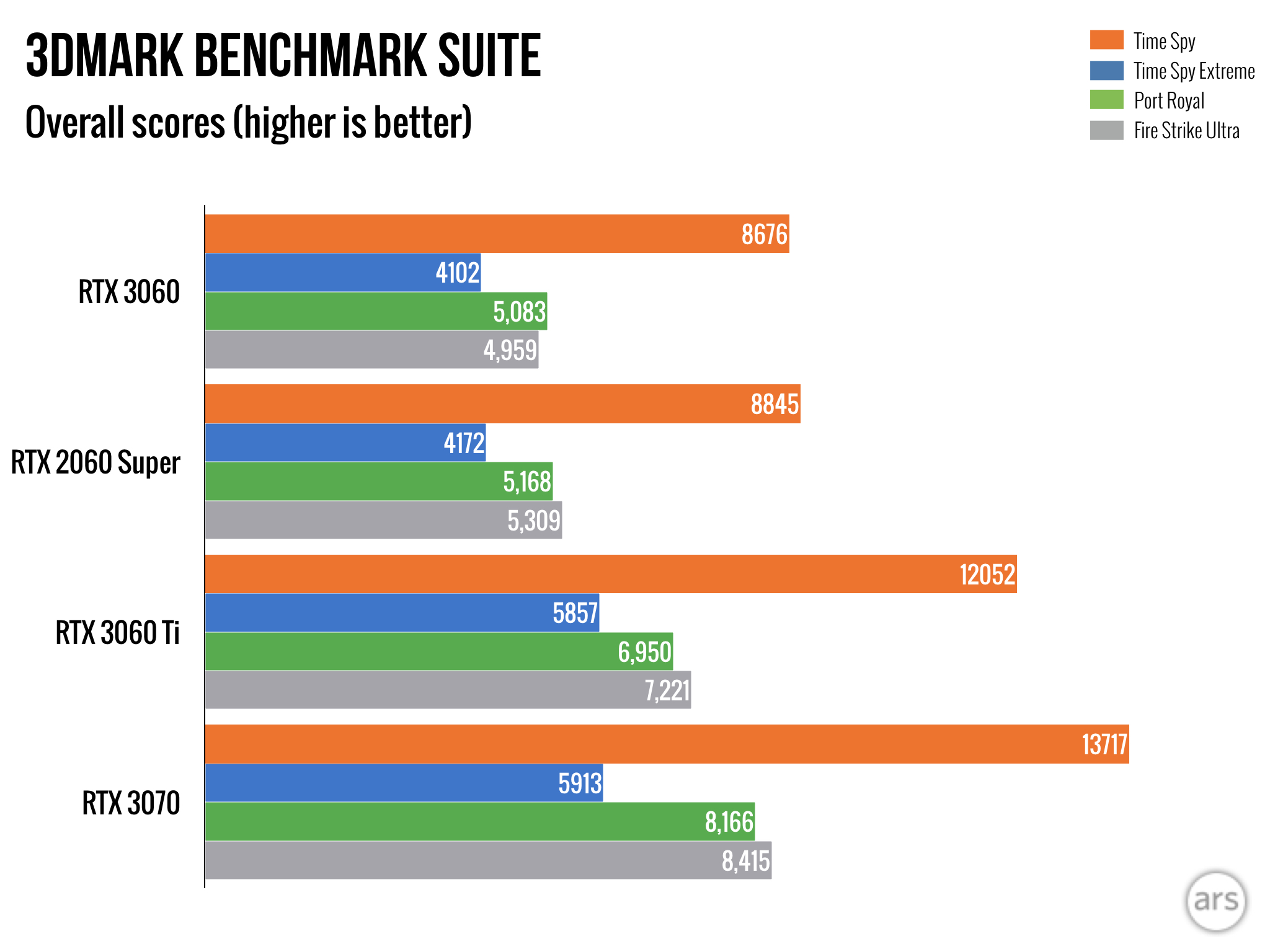

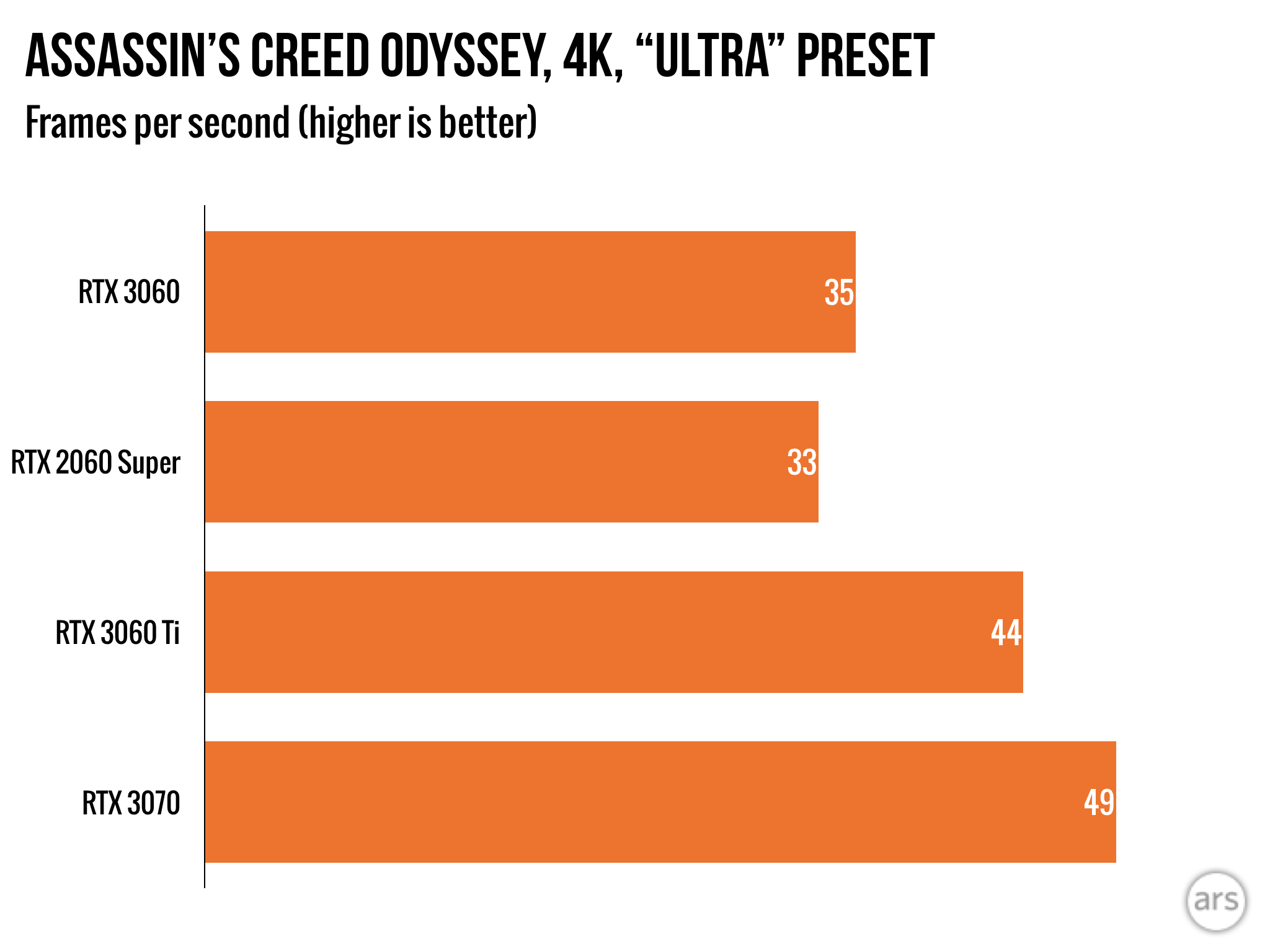

The RTX 3060 and the older RTX 2060 Super are neck and neck throughout this benchmark collection.

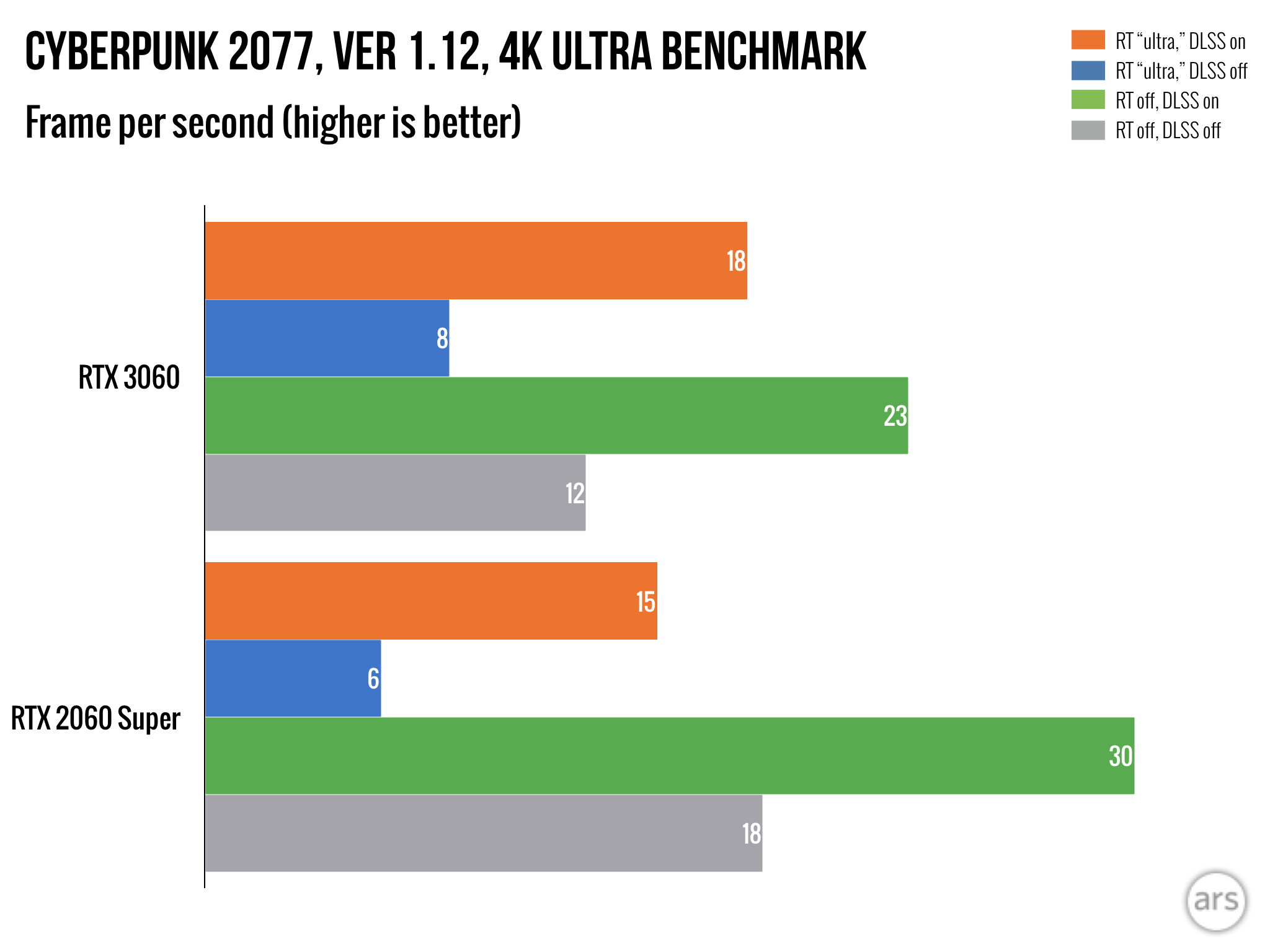

The RTX 3060 and the older RTX 2060 Super are neck and neck throughout this benchmark collection. This is a particularly punishing benchmark, meant to show percentage differences between models. And notice here, a divide emerges when RT is turned to max, versus when it's disabled entirely.

This is a particularly punishing benchmark, meant to show percentage differences between models. And notice here, a divide emerges when RT is turned to max, versus when it's disabled entirely. In Watch Dogs Legion's case, on the other hand, enabling and disabling RT didn't change the percentage differences between the RTX 3060 and the 2060 Super (not shown in this chart, apologies).

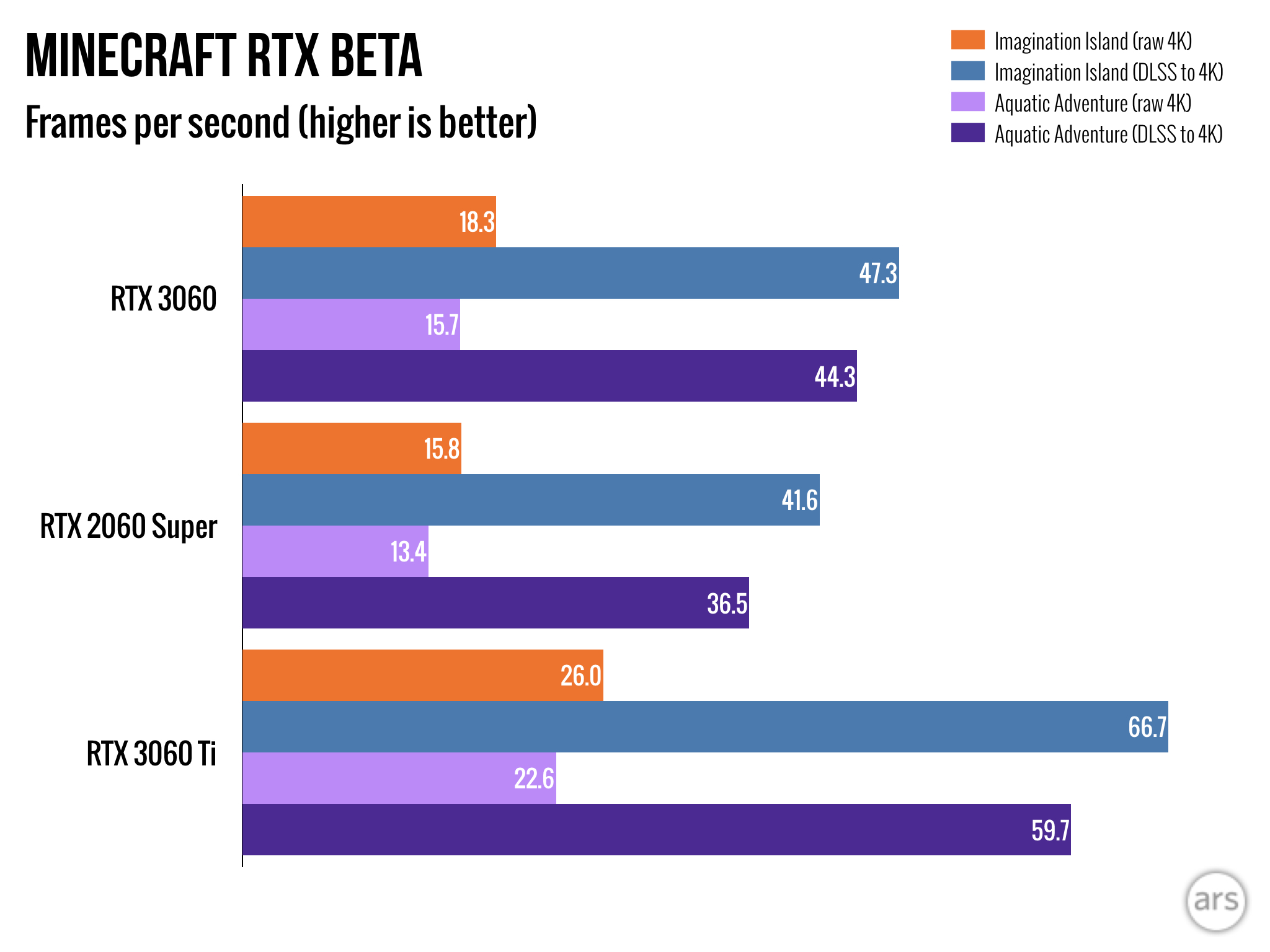

In Watch Dogs Legion's case, on the other hand, enabling and disabling RT didn't change the percentage differences between the RTX 3060 and the 2060 Super (not shown in this chart, apologies). Ray tracing enabled for all Minecraft tests.

Ray tracing enabled for all Minecraft tests. Ray tracing enabled for all Control tests.

Ray tracing enabled for all Control tests. The rest of the gallery's benchmarks do not include ray tracing.

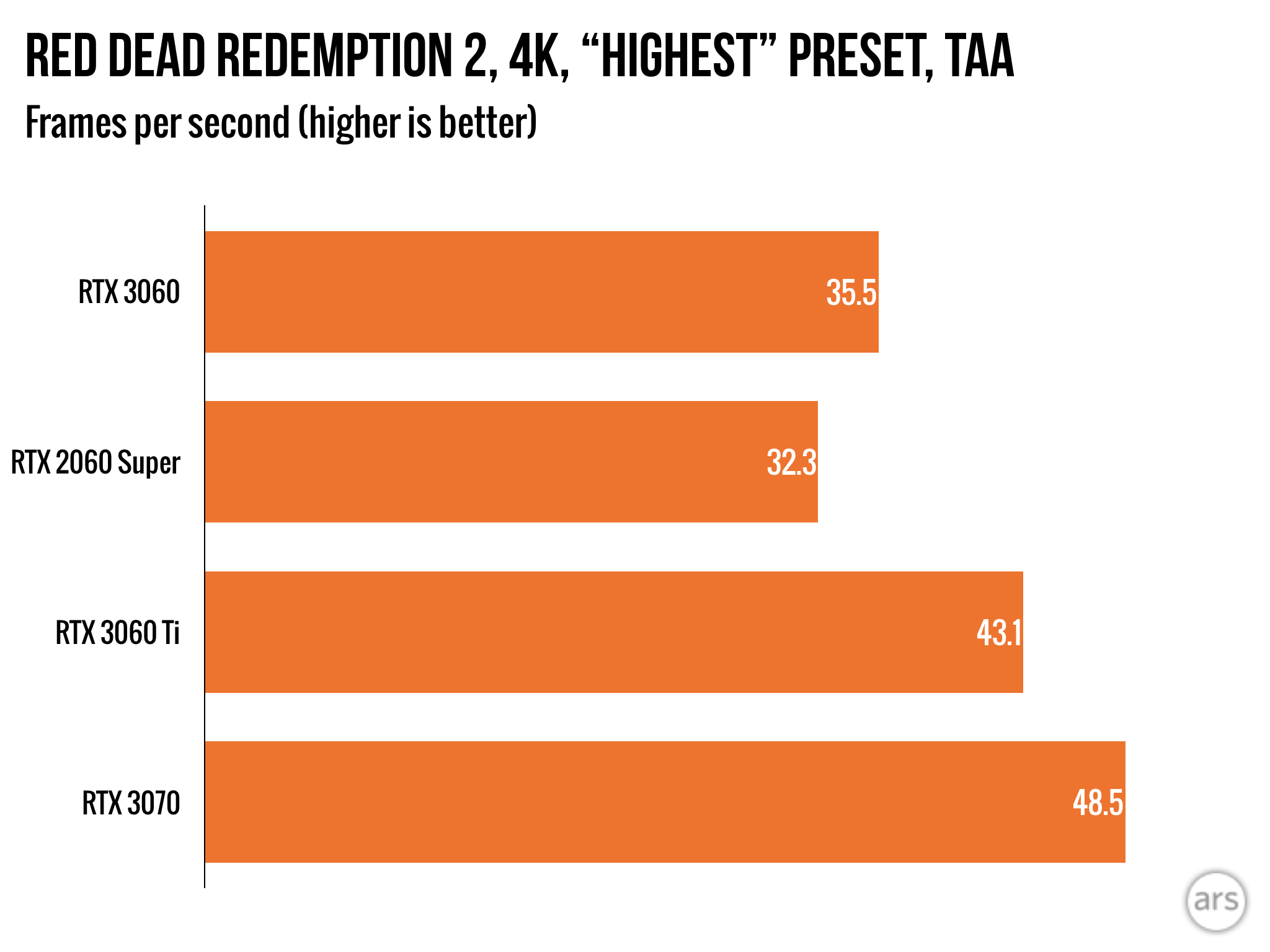

The rest of the gallery's benchmarks do not include ray tracing. A newer Rockstar game benefits more from the newer GPU.

A newer Rockstar game benefits more from the newer GPU. The older RTX 2060 Super wins this benchmark battle with an older Rockstar game.

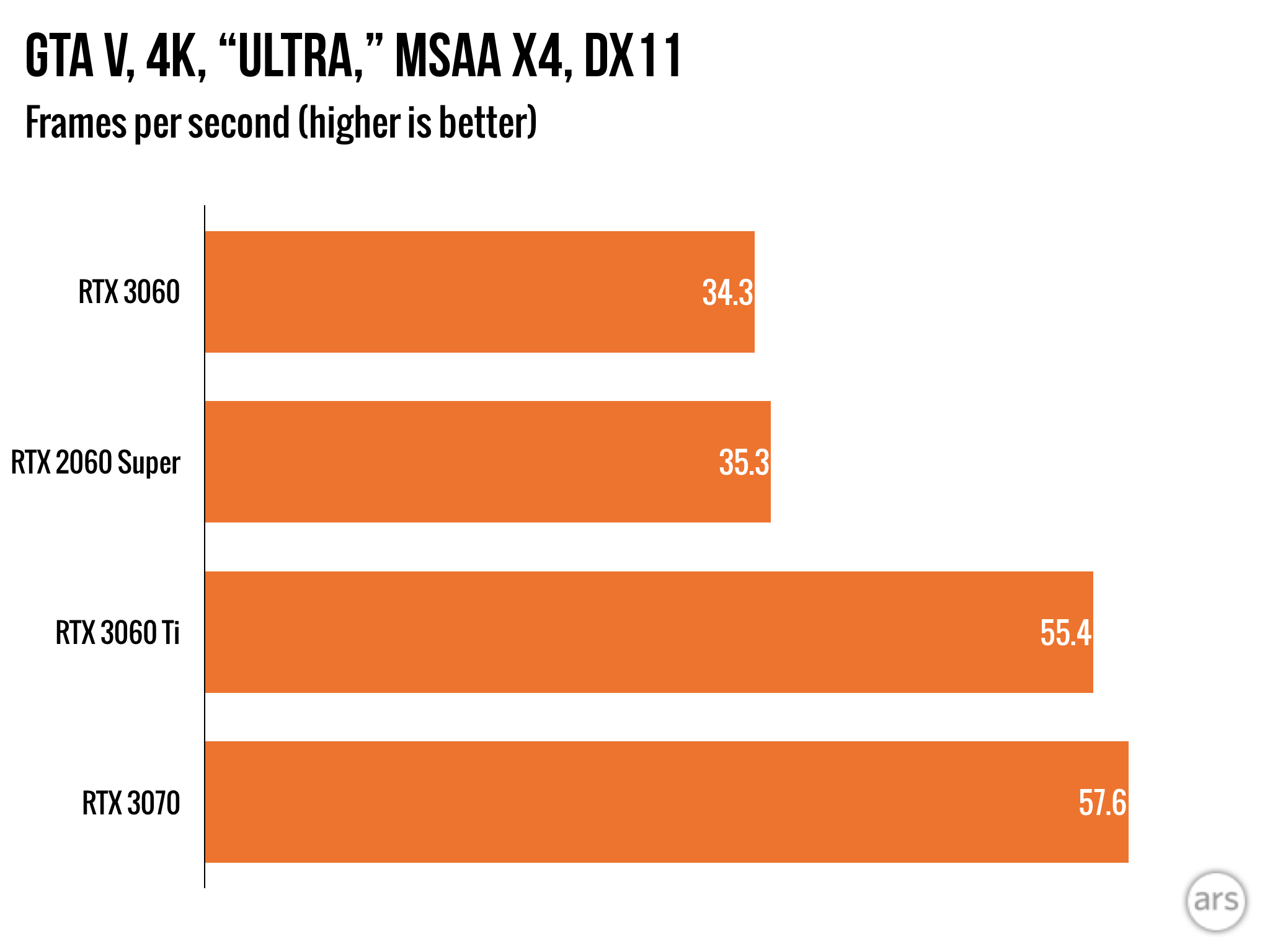

The older RTX 2060 Super wins this benchmark battle with an older Rockstar game. Comparable results in Novigrad, but the older 2060 Super wins.

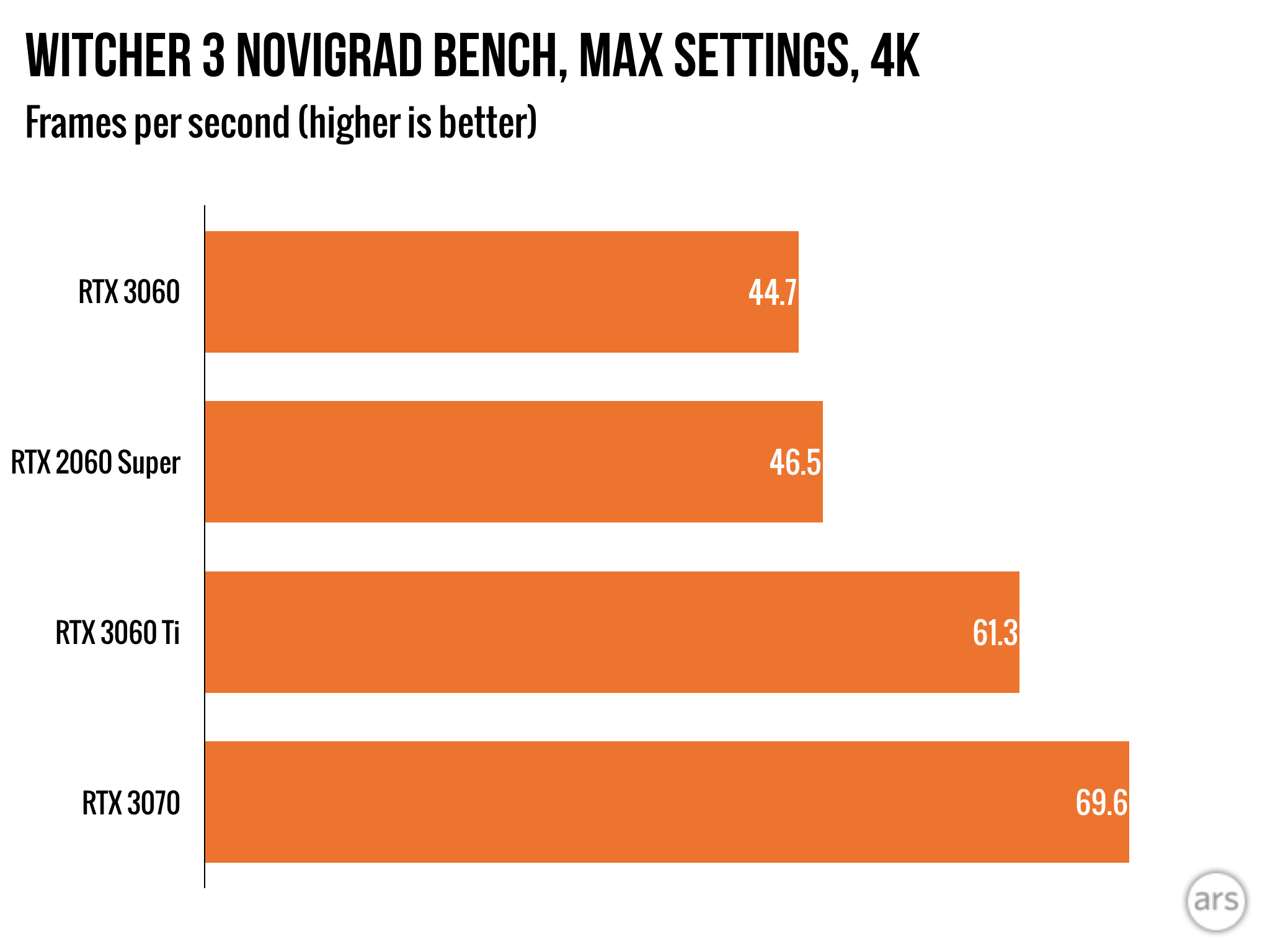

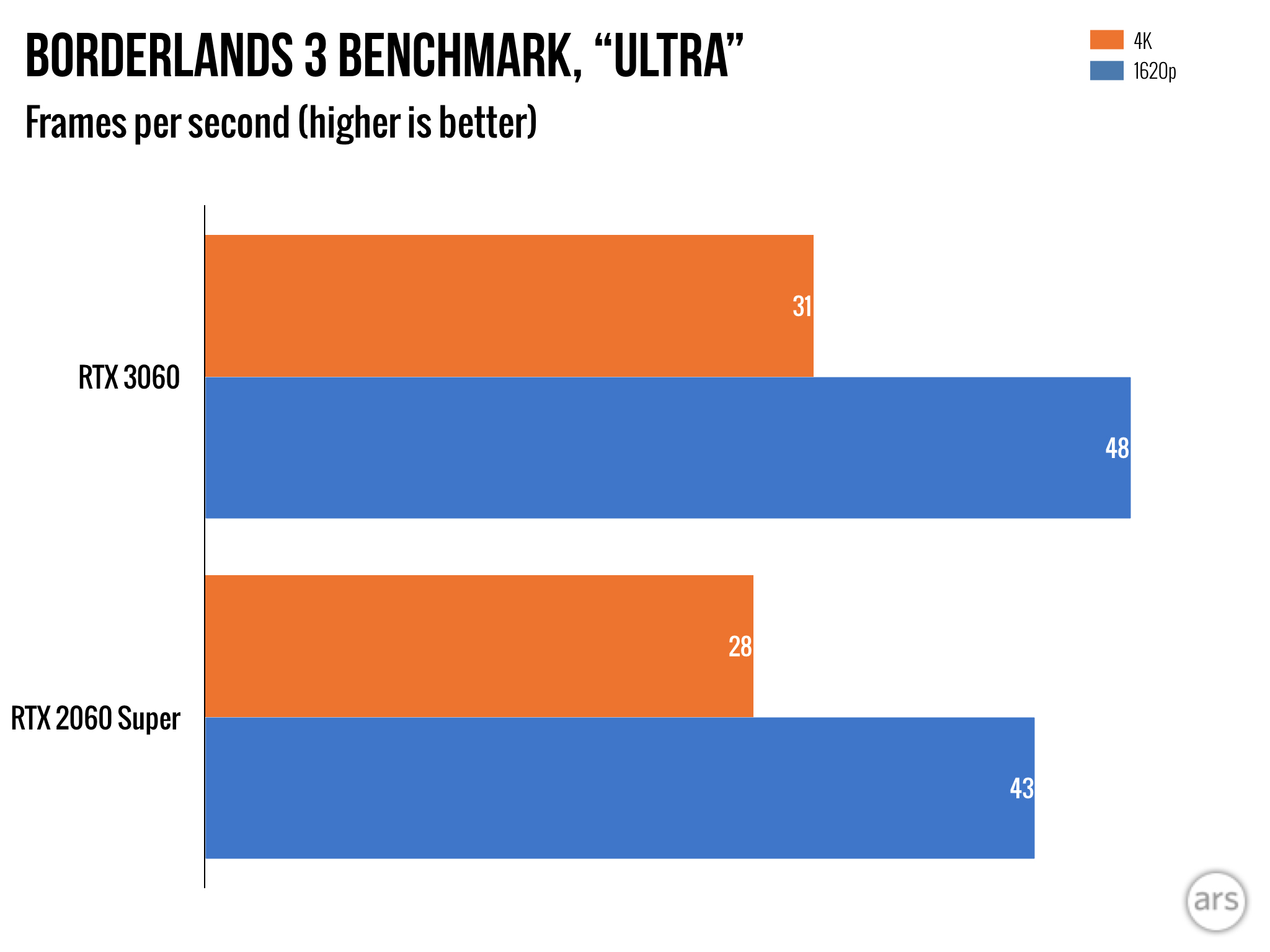

Comparable results in Novigrad, but the older 2060 Super wins. Borderlands 3 has recently joined our testing suite, and I'll beef up its benchmark numbers for other GPUs in reviews to come.

Borderlands 3 has recently joined our testing suite, and I'll beef up its benchmark numbers for other GPUs in reviews to come.

All of the above benchmarks were conducted on my standard Ars testing rig, which sports an i7-8700K CPU (overclocked to 4.6GHz), 32GB of DDR4-3000 RAM, and a mix of a PCIe 3.0 NVMe drive and standard SSDs.

My first question mark came when I saw the RTX 2060 Super beat the RTX 3060 in a variety of 3DMark benchmarks. The older card enjoyed a performance lead as high as 6.5 percent in a straightforward GPU muscle test ("Fire Strike Ultra"), and it even bested the newer card, however marginally, in a head-to-head 3DMark ray-tracing showdown.

With this knowledge in hand, I grabbed an Nvidia benchmark table provided to members of the press, which aligned with most of my other tests—albeit with results that were a bit more charitable to the newer RTX 3060 than my own. Where Nvidia found that The Witcher 3 and Cyberpunk 2077 (ray tracing disabled) were "dead even" between the 3060 and the 2060 Super as tested in 4K resolution, my personal, repeatable benchmarks gave the older card a lead. Grand Theft Auto V, which runs a scant 2.9 percent faster on the 2060 Super in 4K, doesn't appear in Nvidia's provided benchmark table.

But the rest of my tests did show that the RTX 3060 generally clawed ahead in gaming performance. Many of the best results came when a given benchmark included ray-tracing effects, yet even as a pure, old-school rasterization card, it often enjoyed a noticeable 9 percent lead on non-RT tests.
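For readers who want to reproduce figures like the "6.5 percent" or "9 percent" leads above from their own runs, here is a minimal sketch of the usual calculation. The function name is hypothetical, and the frame rates are made-up inputs for illustration, not this review's measurements.

```python
def percent_lead(fps_a: float, fps_b: float) -> float:
    """Return how much faster result A is than result B, as a percentage of B."""
    if fps_b <= 0:
        raise ValueError("baseline frame rate must be positive")
    return (fps_a / fps_b - 1.0) * 100.0


# Hypothetical averages, for illustration only.
print(f"{percent_lead(54.5, 50.0):.1f}%")  # 9.0%
print(f"{percent_lead(50.0, 54.5):.1f}%")  # -8.3%
```

The second line shows why the choice of baseline matters: a 9 percent lead for one card is not a 9 percent deficit for the other.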

Resolving resolution, with the help of DLSS

As a reminder, Ars Technica focuses its tests on 4K performance, since GPU benchmarks at lower resolutions do less to clarify the sheer power a GPU will provide to your system. Any gains seen here will likely look even better if you drop your preferred resolution into 1440p territory. Still, as my benchmarks demonstrate, this isn't necessarily the card you want to throw at 4K resolutions, unless you're content with capping frame rates at 30fps (or playing settings whack-a-mole to reach roughly 45fps, which looks steady enough on variable-refresh-rate monitors, where 45fps is generally the cutoff for smoothness).
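The resolution and frame-rate trade-offs in that paragraph come down to simple arithmetic: 4K pushes 2.25 times as many pixels as 1440p, and each frame-rate target implies a per-frame time budget. A quick sketch using standard 16:9 resolutions; the helper names are illustrative, not from any real tool.

```python
RESOLUTIONS = {"4K": (3840, 2160), "1440p": (2560, 1440), "1080p": (1920, 1080)}


def pixel_count(name: str) -> int:
    """Total pixels per frame for a named 16:9 resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def frame_time_ms(fps: float) -> float:
    """Time budget per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


print(pixel_count("4K") / pixel_count("1440p"))  # 2.25
for fps in (30, 45, 60):
    print(f"{fps}fps -> {frame_time_ms(fps):.1f} ms per frame")
```

Real-world frame rates rarely scale linearly with pixel count, since CPU limits and other bottlenecks still apply, so treat the 2.25x ratio as a rough ceiling on the gain from dropping to 1440p rather than a prediction.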

That processing reality makes this GPU's emphasis on 12GB of VRAM a bit fishy. That much VRAM makes sense if you're interested in 4K games with beefy add-on texture packs meant to show off all those pixels, and I would have loved 12GB of VRAM on the bigger RTX 3000-series cards. But this is a case where a downgrade to 8GB, barely noticeable in 1440p games, would have made more sense on paper, and it makes us wonder whether Nvidia was forced into the 12GB fray by COVID-19 production complications. (We've heard rumblings that smaller RAM capacities are harder to find at scale these days.)

The RTX 3060 also invites players to enjoy the incredible ecosystem that is DLSS, which has proven a must-toggle option when pushing a game's graphics settings to their limits. DLSS' implementation across dozens of games has sold us on Nvidia's sales pitch: that a native 1080p signal can be upscaled to 1440p with a remarkable amount of preserved clarity. It's not perfect, but DLSS' balance between smudgy moments and surprisingly crisp details is almost always superior to a game's built-in temporal anti-aliasing (TAA) option, which usually comes with its own ghosting artifacts by default.
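To see why upscaling from 1080p to 1440p saves so much work, compare the pixel counts: the per-axis scale is 0.75, so the GPU renders only about 56 percent of the output pixels before upscaling. This is plain arithmetic on the two resolutions named above, not a model of how DLSS itself reconstructs the image, and the helper function is hypothetical.

```python
def render_fraction(internal: tuple[int, int], output: tuple[int, int]) -> float:
    """Fraction of output pixels actually rendered before upscaling."""
    (iw, ih), (ow, oh) = internal, output
    return (iw * ih) / (ow * oh)


fraction = render_fraction((1920, 1080), (2560, 1440))
print(f"Rendered: {fraction:.2%} of output pixels")  # Rendered: 56.25% of output pixels
print(f"Per-axis scale: {1080 / 1440:.2f}")  # Per-axis scale: 0.75
```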

The exception remains Death Stranding, whose custom coding of the DLSS standard continues to poorly render "organic" visual elements like rain, foliage, and visible beads of water. At this point, we're convinced Kojima Productions has seen none of Ars' repeated complaints and will leave this issue unresolved—which is a shame, because the game's PC version is otherwise a great example of how DLSS can neatly lift a game into a stable 60fps range.

Additionally, DLSS has yet to become a true industry standard, in spite of Nvidia's apparent "go ahead and use it" liberation of the tech for anyone who's interested. It's a welcome rendering bonus in compatible games, particularly the eye-opening RT implementation in Minecraft, and it's practically required to get Cyberpunk 2077 running at a certain threshold. But some of the PC industry's most demanding fare, particularly Ubisoft's open-world offerings, have yet to join the party; perhaps they're holding out for whatever upscaling mechanism AMD eventually rolls out for its RDNA 2 line of GPUs (which we're still waiting to hear news about).

I point all of this out because it's easy for me to shrug my shoulders at DLSS after years of testing and scrutinizing it. You, on the other hand, may still be newly charmed. Anyone who has held off on upgrading their PC GPU until Nvidia's Ampere line got down to the sub-$400 level should look at the list Nvidia provides of compatible DLSS games and think about whether that sweetens the 1440p deal.

Unproven mining pickaxe

Nvidia's last twist for the RTX 3060 comes with a rare performance downgrade (or "nerf," if you will) for a particular use case: the mining of cryptocurrencies. Or, at least, one of them.

"RTX 3060 software drivers are designed to detect specific attributes of the Ethereum cryptocurrency mining algorithm, and limit the hash rate, or cryptocurrency mining efficiency, by around 50 percent," the company announced in a blog post last week. This came alongside the announcement of an entirely new product line, the Nvidia Cryptocurrency Mining Processor (CMP), slated to launch in "Q1 2021." Nvidia has thus far revealed specs for four discrete SKUs, but not prices, along with clarification that these devices will be designed with performance-minded engineering tricks, particularly the removal of video-out ports to make way for a different cooling airflow path.

Ars Technica covers a fair amount of cryptocurrency news, but as of this review, we have deliberately opted not to create content that teaches people how to get into cryptomining themselves. Among other things, the trend is not great for the environment. Still, since Nvidia has loudly acknowledged the practice by building a nerf into the GPU that we're holding in our hands, I thought I'd give the feature a quick test. After all, the drivers in question were only described as effective against Ethereum. What about other currencies?

In my case, I settled on vertcoin for two reasons: it has a convenient one-click mining app on multiple OSes, which includes a clear "hashing rate" figure that I can easily compare amongst GPUs; and for now, it's seemingly worthless. I mined for approximately 40 minutes in all while testing on four GPUs, and I have already deleted my digital "haul," so if I've just trashed the next millions-strong dogecoin, so be it.

My findings were as follows, measured as vertcoins mined per hour:

- RTX 3080: 9.21

- RTX 3060 Ti: 7.21

- RTX 3060: 2.21

- RTX 2060 Super: 3.44

All of these tests included GPU downclocks, VRAM upclocks, and power-level adjustments (as tweaked within MSI Afterburner) to keep the GPUs in question operating at roughly 65°C, as I'm not trying to fry this hardware to make a few fake bucks. The exception came from the lowest performer on my list, the apparently nerfed RTX 3060, which I couldn't tweak to run any higher than 55°C, try as I might.

To be clear, this is a painfully anecdotal example of how the brand-new RTX 3060 slots into the cryptomining ecosystem. What are the 3060's Ethereum rates? What about other cryptomining solutions that use wildly different algorithms—and reportedly skirt Nvidia's driver-side solution? And how easy might it be to edit Nvidia's drivers (or repurpose older ones) to make the RTX 3060 pump out a few more vertcoin per hour? We can't say. But something is going on with the RTX 3060 and its default Nvidia drivers, and based on specs, it stands to reason that this rate is half the GPU's actual mining capabilities.
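The vertcoin figures listed above can be normalized against the RTX 3060 to make the gap easier to read. The sketch below uses the review's own per-hour numbers; the last line is only the speculative arithmetic behind the "half" reasoning in the previous paragraph, and it assumes a roughly 50 percent limiter also applies to vertcoin, which has not been verified.

```python
# Vertcoins mined per hour, as reported in this review's anecdotal test.
RATES = {"RTX 3080": 9.21, "RTX 3060 Ti": 7.21, "RTX 3060": 2.21, "RTX 2060 Super": 3.44}

# Assumption (unverified for vertcoin): the driver limiter roughly halves throughput.
ASSUMED_LIMIT_FACTOR = 0.5

baseline = RATES["RTX 3060"]
for card, rate in sorted(RATES.items(), key=lambda item: item[1], reverse=True):
    print(f"{card:<15} {rate:5.2f}/h  {rate / baseline:.2f}x the RTX 3060")

unthrottled_guess = baseline / ASSUMED_LIMIT_FACTOR
print(f"Speculative unthrottled RTX 3060: {unthrottled_guess:.2f}/h")  # 4.42/h
```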

Verdict: It's fine! But...

Really, the worst thing I can say about the RTX 3060 is that it's not as tantalizing a jump from the 2060 as other "plus-1,000" RTX cards have proven over the past six months. The powerful $799 RTX 3080 rendered the famously expensive RTX 2080 Ti moot, while the RTX 3070 showed up at a fair $499 price with a serious leg up on the 2070 Super (and was honestly comparable to the RTX 2080 Ti). Heck, the RTX 3060 Ti already compared well to the RTX 2060 Super, leaving this latest card in a funky comparison position.

Instead, you have to peck through benchmarks and lists to find ideal examples of games where the newer card beats its 2060 Super predecessor... or make your peace with a less-astounding performance jump and a possible price savings, should we get a belated Christmas miracle in the form of stable GPU stock. We're not holding our breath, but if fewer drool-worthy specs and limited crypto performance change the scalping market for this GPU, that may be its own Christmas in February.
